Over the past few months, in collaboration with Harvard University’s Technology for Social Good (T4SG) team, we have tested the potential of artificial intelligence (AI) to improve how vulnerable populations find and connect with social services and benefits.

We know that AI has tremendous potential in the commercial sector, but can it really enhance the nonprofit work we do at One Degree? This project gave us an opportunity to experiment with AI and test how it might be used to improve the way we connect people to the resources they need. In this blog post, we will walk you through the experiment, its early results, and what comes next as we evaluate its potential value for our future work.

Why Use AI? Why Is It Valuable?

The people we serve often struggle to find the right services at the right time. With thousands of resources available, each with its own eligibility requirements, it’s always a challenge to match people with the right opportunities and support.

AI has the potential to simplify this process, allowing for quicker, more accurate resource matching, without the information overload that can lead to confusion and frustration. But as with any emerging technology, we must test and evaluate whether AI will truly benefit the communities we serve before fully adopting it.

At One Degree, we are committed to using technology to create a more equitable social care ecosystem, and this must be done thoughtfully. That is why this AI experiment was designed to help us test effectiveness, reliability, and trust in real-world scenarios—without jumping into broad implementation right away.

The AI Chatbot Experiment: Function-Calling Method vs. Retrieval-Augmented Generation

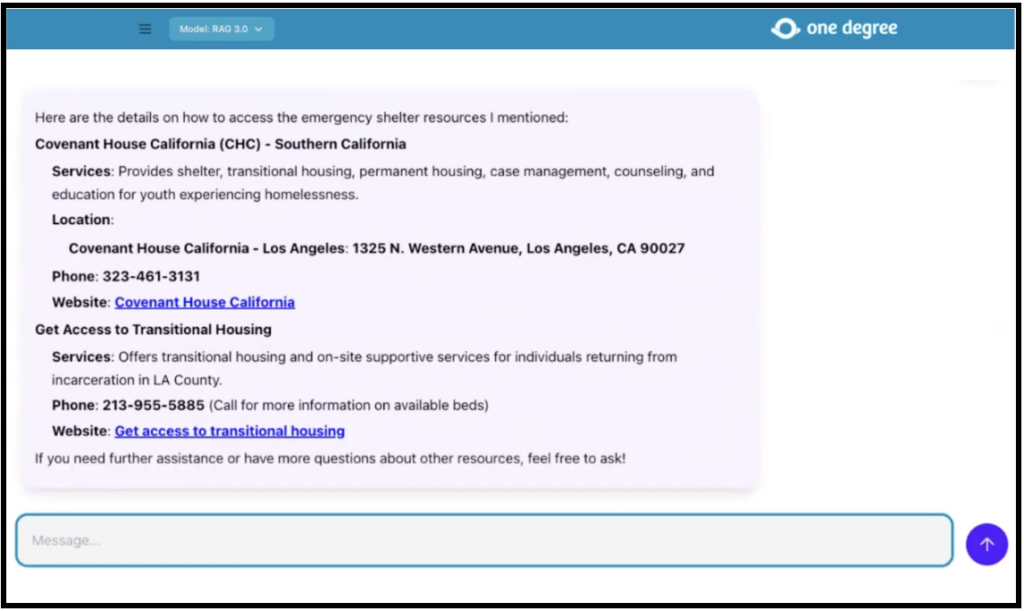

In collaboration with the team at Harvard, we built a custom AI chatbot for internal testing. The chatbot took in user queries, such as questions or requests for specific types of support, and then recommended resources based on the user’s circumstances, such as their location, eligibility criteria, and specific needs related to housing, food assistance, healthcare, employment, or legal aid.

We conducted two primary tests. The first used a function-calling method, which involved taking user queries, converting them into structured database queries, and retrieving responses directly from our system. The second used a retrieval-augmented generation (RAG) approach, which transformed both resources and user queries into numerical vector representations (embeddings). Using cosine similarity, which scores how closely two vectors point in the same direction, the AI could then match user needs with the most relevant resources efficiently.
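To make the cosine-similarity matching step concrete, here is a minimal illustrative sketch in Python. It is not the chatbot's actual code: the resource names, the three-number vectors, and the function names `cosine_similarity` and `rank_resources` are all hypothetical. A real system would get its vectors from an embedding model and would likely store them in a vector database.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction, so treat it as matching nothing.
        return 0.0
    return dot / (norm_a * norm_b)


def rank_resources(query_vector, resource_vectors, top_k=3):
    """Score every resource against the query and return the best top_k."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in resource_vectors.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy vectors. The three dimensions loosely stand for
# "housing", "food", and "legal"; real embeddings have hundreds of
# dimensions with no such human-readable meaning.
RESOURCES = {
    "Emergency shelter": [0.9, 0.1, 0.0],
    "Food pantry": [0.1, 0.9, 0.0],
    "Tenant legal clinic": [0.6, 0.0, 0.8],
}

# Hypothetical vector for a query that is mostly about housing.
query = [0.8, 0.2, 0.1]

for name, score in rank_resources(query, RESOURCES, top_k=2):
    print(f"{name}: {score:.3f}")
```

With these toy numbers, the housing-heavy query ranks the shelter first and the tenant legal clinic second, with the food pantry scoring lowest. In a RAG setup, the top-ranked resources would then be passed to the language model as context for writing its answer.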

The RAG approach performed significantly better in both response accuracy and speed, making it the more viable option moving forward. While the AI showed promise in speeding up searches and improving accuracy, testing also revealed challenges, such as gaps in data quality and the need for clearer confidence indicators in AI-driven recommendations.

What Did We Learn and What’s Next?

Key Takeaways:

- AI shows promise for improving accuracy: Early testing indicates that AI can help provide more personalized and relevant resource recommendations by taking each user's location, eligibility, and specific needs into account.

- Efficiency gains are possible, but require investment: The AI tool significantly reduced the time staff needed to find relevant resources, freeing them to focus on helping people use those resources. Ongoing refinements are still needed to ensure reliable, high-quality recommendations.

- Trust and transparency are crucial: Users must feel confident in the AI’s ability to recommend accurate and relevant resources. Building trust with people seeking services will be key.

Next Steps: Finding Funders and Strategic Partners

- Refining the AI tool: We will continue to refine the chatbot, improving its accuracy and usability based on internal feedback.

- Testing with trusted partners: After further internal testing, we plan to beta-test the AI tool with 10 to 30 community-based organizations (CBOs) in Los Angeles. This will help us gauge how the tool performs with external users and gather insights on its real-world value.

- Securing innovation funding: To scale and refine the AI tool, we need innovation funding from funders who believe in the power of AI for social good. Philanthropic organizations, venture philanthropy, and tech-driven funders will be critical partners in bringing this vision to life.

- Building partnerships with organizations with quality databases: The success of AI-driven solutions depends on high-quality, well-structured resource data. We are actively seeking partnerships with other nonprofits and stakeholders who can contribute to and benefit from this initiative.

- Evaluating integration into One Degree’s core platform: At this stage, we are still assessing whether AI is the right fit for broader integration into One Degree’s offerings, including 1degree.org. We are carefully evaluating the results of our testing before deciding how to move forward with AI as part of our service delivery.

We’re excited about the potential of AI, but we’re taking a thoughtful, measured approach to ensure that any technology we adopt genuinely benefits the communities we serve. We will continue to test, learn, and adapt as we move forward, and we’ll keep you updated on our findings. A huge thank you to the Harvard T4SG team for their time, expertise, and dedication to this project! It has been an enormously valuable learning experience to collaborate with such a talented team of technologists — special thanks to our exceptional project leads Sabrina Hu and Christopher Perez.

We’re going to continue sharing our insights as we experiment and implement AI, and we hope the lessons we have learned from this experiment can be valuable to other nonprofits and organizations interested in using AI for social care.

If you are interested in investing in AI in the social sector, let's connect to explore the possibilities.